Applied AI researcher with 4+ years of experience specializing in Data Science, Machine Learning, and Computer Vision.

Experience includes academic and industry collaborations through UKRI-funded projects and international research partnerships. I hold an MSc in Artificial Intelligence from Manchester Met University, awarded with distinction.

I also worked as a Senior AI Researcher at Manchester Met University, contributing to MRI image analysis and electromyography signal research.

At the University of Leeds, I was a Data Science Graduate RA, analyzing eye-tracking and shopping behavior data in collaboration with Ocado.

At Northeastern University (USA), I analyzed object detection models (YOLO, Detectron2, Faster R-CNN, etc.) for a range of tasks and implemented object detection, counting, and tracking using PyTorch, OpenCV, Supervision, Ultralytics, and NumPy.

Published peer-reviewed research with IEEE and Springer, and conducted over 150 technical reviews for journals from publishers such as ACM, IEEE, Springer, and Elsevier.

Received a UK Global Talent Endorsement from Tech Nation. I am passionate about advancing AI research and delivering innovative real-world applications.

Collaborate with research team to ensure effective comparison of electromyography signals and MRI images. Lead development of analysis code for bioelectrical signals and medical images using MATLAB and Python. Support research exploring the relationship between muscle structure, function, and chronic pain.

UKRI-funded research project in collaboration with Ocado/M&S on eye-tracking shopping data analysis. Quantitative analysis of shopping details (purchased products, money spent, fixations on pictures/prices).

Collaborated with administrators and editors, multitasked effectively, and met deadlines with a keen eye for detail. Reviewed 150+ submitted articles, ensuring compliance with standards and offering constructive guidance.

Represented the University at recruitment events, assisted with registration, and facilitated workshops, showcasing my course to prospective students; received positive feedback for effective communication/interpersonal skills.

Contributed to ML/DL application projects, demonstrating proficiency in computer vision and data processing. Published research findings in top-tier journals and conferences.

Successfully clarified complex concepts for 40+ BSc students, resulting in a 15% improvement in overall student understanding.

Designed and developed educational websites, collaborated in research associations, and analyzed chat group data using Python-based Telegram chat statistics.

To be submitted soon

September 2023 - November 2024

September 2022 - September 2023

September 2017 - November 2021

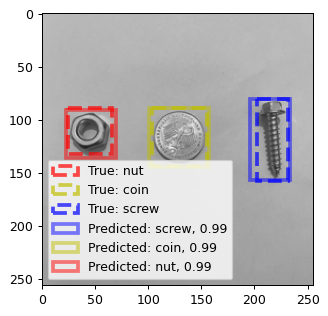

Collected metal objects datasets.

Applied feature extraction with HOG and LBP.

Extracted and saved the desired features for labeled images in the form of a feature matrix.

Classified with k-NN, SVM, and Naïve Bayes.

Examined the resulting confusion matrices and compared the per-class performance of HOG and LBP.

Trained and tested several deep learning models on the dataset, including YOLOv5, RetinaNet, Detectron2, Faster R-CNN, VGG16, VGG19, and AlexNet.
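A minimal sketch of the LBP feature step described above, assuming grayscale images and the basic 3x3 (8-neighbour) LBP variant. The function names `lbp_histogram` and `build_feature_matrix` are illustrative and not taken from the project code; the resulting matrix is the kind of input a k-NN, SVM, or Naïve Bayes classifier would consume.

```python
import numpy as np


def lbp_histogram(gray: np.ndarray) -> np.ndarray:
    """Return a normalized 256-bin histogram of basic 3x3 LBP codes."""
    gray = np.asarray(gray, dtype=np.float64)
    h, w = gray.shape
    center = gray[1:-1, 1:-1]
    # Offsets of the 8 neighbours, clockwise from the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = gray[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the center.
        codes |= (neighbour >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()


def build_feature_matrix(images) -> np.ndarray:
    """Stack one LBP histogram per image into an (n_images, 256) matrix."""
    return np.stack([lbp_histogram(img) for img in images])
```

Each row of the matrix corresponds to one labeled image, so the labels can be kept in a parallel array of the same length.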

Collected handwritten logic circuits datasets.

Augmented the pictures.

Compared the performance of different deep learning methods: YOLO, Faster R-CNN, RetinaNet, Detectron2 on the dataset.
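The comparison metric is not stated above. As an assumption, the sketch below shows intersection over union (IoU) with greedy matching, a common building block for comparing detectors on the same ground truth. Boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the function names are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def match_detections(predictions, ground_truth, threshold=0.5):
    """Greedily match predictions to ground truth; return (tp, fp, fn)."""
    unmatched = list(ground_truth)
    tp = 0
    for pred in predictions:
        # Pick the remaining ground-truth box that overlaps this prediction most.
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= threshold:
            unmatched.remove(best)
            tp += 1
    return tp, len(predictions) - tp, len(unmatched)
```

Running `match_detections` once per model on the same images gives true-positive, false-positive, and false-negative counts that can be compared side by side.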

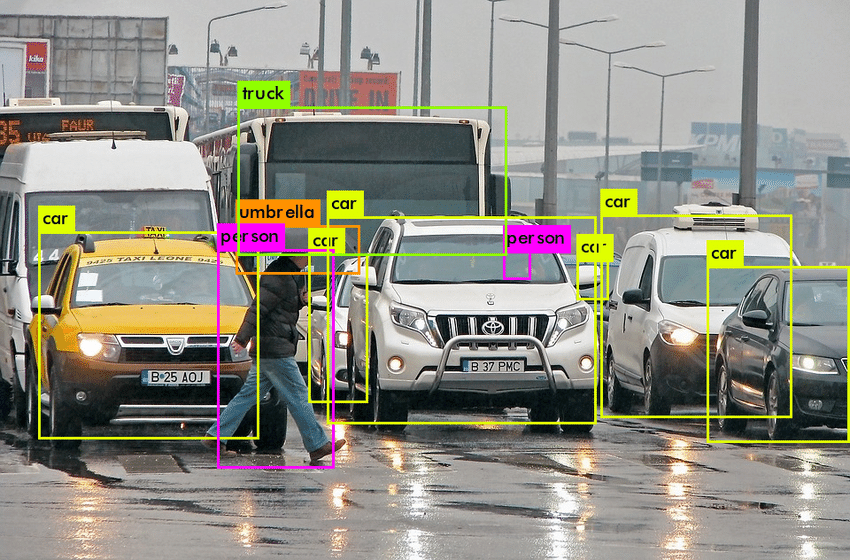

I trained a YOLO model on video footage capturing vehicular movement.

The video contains various types of vehicles traversing a road scene.

Utilizing YOLO and perspective transformation techniques, the code identifies and tracks vehicles in each frame.

Vehicle positions are extracted and connected, allowing for the estimation of their speeds.

The final output is a video showing annotated vehicle speeds, providing insights into the dynamics of traffic flow.
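The speed estimation step can be sketched as follows, assuming a 3x3 homography that maps pixel coordinates to road-plane coordinates in metres and one tracked position per frame. The homography, the function names, and the per-frame track layout are assumptions for illustration, not the project's actual code.

```python
import numpy as np


def to_road_plane(points_px, homography):
    """Map Nx2 pixel points to road-plane coordinates via a 3x3 homography."""
    pts = np.asarray(points_px, dtype=np.float64)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homogeneous @ np.asarray(homography, dtype=np.float64).T
    # Divide by the homogeneous coordinate to get back to 2D.
    return mapped[:, :2] / mapped[:, 2:3]


def estimate_speed_kmh(track_px, homography, fps):
    """Average speed of one tracked vehicle, given one position per frame."""
    road = to_road_plane(track_px, homography)
    step_lengths = np.linalg.norm(np.diff(road, axis=0), axis=1)
    elapsed_s = (len(road) - 1) / fps
    return float(step_lengths.sum() / elapsed_s * 3.6)  # m/s to km/h
```

The perspective transform matters because equal pixel displacements near the top and bottom of the frame correspond to different distances on the road.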

This project utilizes a VGG16 model to classify chest X-ray images into COVID, Normal, and Viral Pneumonia categories.

Preprocesses the COVID-19 Image Dataset obtained from Kaggle to create training and testing sets.

Adapts the VGG16 architecture with modifications for classification and compiles it using Adam optimizer and categorical crossentropy loss.

Applies data preprocessing techniques including rescaling, rotation, and zoom using Keras ImageDataGenerator.

Trains the model for 40 epochs and evaluates its performance on the test set, analyzing accuracy and loss metrics for validation.
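For reference, the categorical cross-entropy loss and accuracy used in the evaluation above can be written in a few lines of NumPy. This is a sketch of the standard definitions, assuming one-hot labels over the three classes (COVID, Normal, Viral Pneumonia) and softmax probabilities as model output; it is not the Keras implementation itself.

```python
import numpy as np


def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean cross-entropy between one-hot labels and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=np.float64)
    # Clip to avoid log(0) for confidently wrong or perfect predictions.
    y_prob = np.clip(np.asarray(y_prob, dtype=np.float64), eps, 1.0 - eps)
    return float(-np.mean(np.sum(y_true * np.log(y_prob), axis=1)))


def accuracy(y_true, y_prob):
    """Fraction of samples whose most probable class matches the label."""
    y_true = np.asarray(y_true)
    y_prob = np.asarray(y_prob)
    return float(np.mean(np.argmax(y_true, axis=1) == np.argmax(y_prob, axis=1)))
```

A model that outputs uniform probabilities over three classes has a loss of ln(3), about 1.10, which is a useful baseline when reading training curves.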

This project employs a Convolutional Neural Network (CNN) to detect breast cancer using the Breast Ultrasound Images Dataset, which comprises benign, malignant, and normal breast ultrasound images.

Utilizes the Breast Ultrasound Images Dataset downloaded from Kaggle, preprocessing it to exclude normal images for segmentation and classification.

Designs a CNN architecture for image segmentation with encoder and decoder blocks, compiled using Adam optimizer and soft dice loss function.

Evaluates the trained model on the test set, computing metrics such as sensitivity, specificity, accuracy, PPV, and NPV for individual images and overall performance.

Plots the Receiver Operating Characteristic (ROC) curve to assess the model's discrimination ability between classes.
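The loss and metrics named above can be sketched as follows. The soft dice loss shown is one common formulation (variants differ in the denominator and smoothing term), and the metric helper assumes binary masks or labels; both function names are illustrative rather than taken from the project.

```python
import numpy as np


def soft_dice_loss(y_true, y_pred, eps=1e-6):
    """1 - Dice coefficient, computed on soft (probabilistic) predictions."""
    y_true = np.asarray(y_true, dtype=np.float64).ravel()
    y_pred = np.asarray(y_pred, dtype=np.float64).ravel()
    intersection = np.sum(y_true * y_pred)
    dice = (2.0 * intersection + eps) / (y_true.sum() + y_pred.sum() + eps)
    return float(1.0 - dice)


def binary_metrics(y_true, y_pred):
    """Sensitivity, specificity, accuracy, PPV, and NPV from binary arrays."""
    y_true = np.asarray(y_true).astype(bool).ravel()
    y_pred = np.asarray(y_pred).astype(bool).ravel()
    tp = int(np.sum(y_true & y_pred))
    tn = int(np.sum(~y_true & ~y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))

    def ratio(num, den):
        # Undefined ratios (empty denominator) are reported as NaN.
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }
```

Applying `binary_metrics` to one image gives per-image values; concatenating all test masks before calling it gives the overall figures.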

I trained MediaPipe and OpenPose models on a YouTube video.

The video is a 34-minute KIDZ BOP dance-along.

The included code, in the form of an IPython notebook, loads the video and performs preprocessing.

Then, by applying different layers, the key points of the human body are extracted in each frame of the video.

After that, the key points are connected to form the estimated pose of the person.
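The final step, connecting key points into a pose, can be sketched as below. The keypoint names, the edge list, and the `(x, y, confidence)` layout are hypothetical and chosen for illustration; MediaPipe and OpenPose each define their own landmark indices and connections.

```python
# Hypothetical subset of body connections, for illustration only.
SKELETON_EDGES = [
    ("left_shoulder", "right_shoulder"),
    ("left_shoulder", "left_elbow"),
    ("left_elbow", "left_wrist"),
    ("right_shoulder", "right_elbow"),
    ("right_elbow", "right_wrist"),
]


def pose_segments(keypoints, edges=SKELETON_EDGES, min_confidence=0.5):
    """Return line segments joining confidently detected keypoints.

    keypoints maps a name to an (x, y, confidence) tuple for one frame.
    """
    segments = []
    for start, end in edges:
        if start not in keypoints or end not in keypoints:
            continue
        xa, ya, conf_a = keypoints[start]
        xb, yb, conf_b = keypoints[end]
        # Skip limbs where either endpoint was detected with low confidence.
        if conf_a >= min_confidence and conf_b >= min_confidence:
            segments.append(((xa, ya), (xb, yb)))
    return segments
```

Each returned segment can then be drawn on the frame as a line to visualize the estimated pose.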

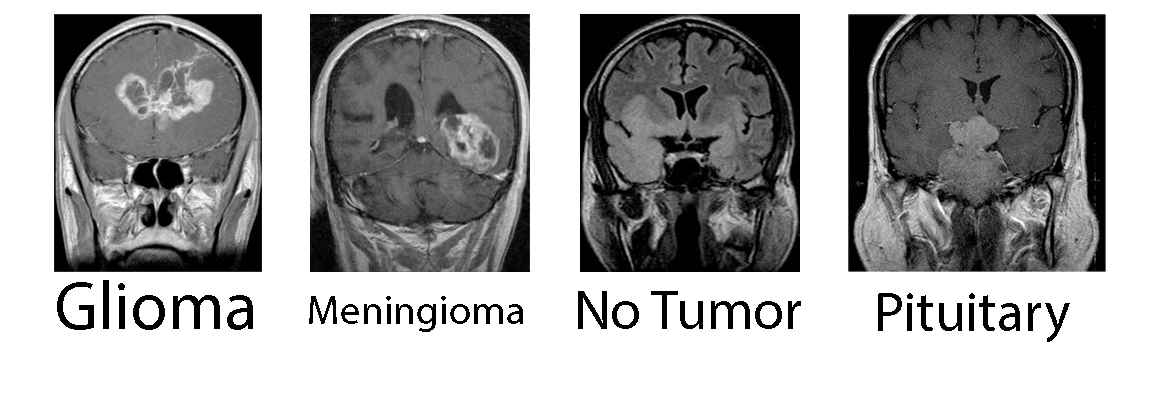

This project implements deep learning models to classify brain tumors from MRI images.

Preprocesses the dataset to remove redundant backgrounds and restructures it.

Configures model architecture and training parameters in config.py.

Trains the models using the specified configurations.

Evaluates the trained models on test data to assess classification performance.
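One way the background-removal step could look is sketched below, assuming the redundant background is the near-black border around the scan and that a simple intensity threshold separates it from the tissue. The threshold value and the function name `crop_to_foreground` are assumptions, not the project's actual preprocessing.

```python
import numpy as np


def crop_to_foreground(image, threshold=10):
    """Crop an image to the bounding box of pixels brighter than threshold."""
    image = np.asarray(image)
    # For color images, use the brightest channel to decide what is foreground.
    gray = image if image.ndim == 2 else image.max(axis=2)
    mask = gray > threshold
    if not mask.any():
        return image  # nothing above threshold, leave the image unchanged
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return image[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
```

Cropping before resizing keeps more of the anatomy in the model's input resolution than resizing the full frame would.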

I trained yolov5s on the WIDER face dataset.

The WIDER dataset comprises 390k+ annotated faces, with bounding boxes and labels.

Faces with an area of less than 2 percent of the whole image are considered.

Finally, the model is trained on the dataset; the final accuracy on the validation dataset is 93.6%.
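A sketch of the label preparation this implies, assuming WIDER-style boxes given as `(x, y, w, h)` in pixels with `(x, y)` the top-left corner, and the YOLO text format of normalized center coordinates and size. The selection helper follows the wording above (faces under 2 percent of the image area are kept); flip the comparison if the intent was to discard them. All function names are illustrative.

```python
def area_fraction(box, image_w, image_h):
    """Fraction of the image area covered by an (x, y, w, h) box."""
    _, _, w, h = box
    return (w * h) / (image_w * image_h)


def select_faces(boxes, image_w, image_h, max_fraction=0.02):
    """Keep boxes whose area is below max_fraction of the image (assumed direction)."""
    return [b for b in boxes if area_fraction(b, image_w, image_h) < max_fraction]


def to_yolo_label(box, image_w, image_h, class_id=0):
    """Convert a pixel (x, y, w, h) box to a YOLO label line."""
    x, y, w, h = box
    cx = (x + w / 2) / image_w
    cy = (y + h / 2) / image_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w / image_w:.6f} {h / image_h:.6f}"
```

One label line per face is written to a text file that shares its name with the image, which is the layout YOLOv5 training expects.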

Face detection in this project is done in real-time using Haar cascades.

Sentiments are analyzed based on the VGG-Face model and cosine similarity used in Facebook's DeepFace framework.

DeepFace was originally designed to handle the dominant facial expression in the frame, but some modifications have been made to cope with multiple faces.
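The cosine similarity comparison, applied per face rather than to a single dominant face, can be sketched as below. The idea of comparing each face embedding against labeled reference embeddings is an assumption made for illustration, as are the names `cosine_similarity` and `label_faces`; the embeddings themselves would come from a model such as VGG-Face.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def label_faces(face_embeddings, reference_embeddings):
    """Give every detected face the label of its most similar reference.

    reference_embeddings maps a label to one embedding (hypothetical setup).
    """
    labels = []
    for embedding in face_embeddings:
        best = max(
            reference_embeddings,
            key=lambda label: cosine_similarity(embedding, reference_embeddings[label]),
        )
        labels.append(best)
    return labels
```

Looping over every detected face, instead of taking only the largest one, is what allows several people in the same frame to be labeled independently.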

Created a database and stored each Telegram message in it.

Looks up the keyword entered in the search bar and shows the messages that contain it.
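A minimal sketch of the store-and-search flow, assuming SQLite as the database and a single `messages` table with `sender` and `body` columns. The database engine, schema, and function names are all assumptions, since none of them are specified above.

```python
import sqlite3


def create_message_store(path=":memory:"):
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS messages "
        "(id INTEGER PRIMARY KEY, sender TEXT, body TEXT)"
    )
    return conn


def add_messages(conn, messages):
    """Insert (sender, body) pairs, one row per message."""
    conn.executemany("INSERT INTO messages (sender, body) VALUES (?, ?)", messages)
    conn.commit()


def search_messages(conn, keyword):
    """Return (sender, body) rows whose body contains the keyword.

    SQLite's LIKE is case-insensitive for ASCII by default; '%' and '_'
    inside the keyword act as wildcards in this simple version.
    """
    rows = conn.execute(
        "SELECT sender, body FROM messages WHERE body LIKE ? ORDER BY id",
        (f"%{keyword}%",),
    )
    return rows.fetchall()
```

Passing the keyword as a bound parameter, rather than formatting it into the SQL string, keeps the search safe against injection from the search bar.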

Augmented the dataset pictures.

Compared the performance of different deep learning methods: YOLO, Faster R-CNN, RetinaNet, Detectron2.